Targeted advertising to children is not new. In recent years, however, this kind of advertising has grown more potent than ever. Developments in hyperpersonalized marketing have raised new concerns over youth mental health. Advancements in artificial intelligence and in the way companies collect user data mean that advertising has become far more personal.

Billboards featuring McDonald's Happy Meals have given way to addictive algorithms that promote poor mental health, disordered eating behaviors, and unhealthy sleeping habits. Every year, social media companies earn billions of dollars in ad revenue from children. In the United States alone, six social media platforms made $11 billion in 2022 from selling ads to users under 18.

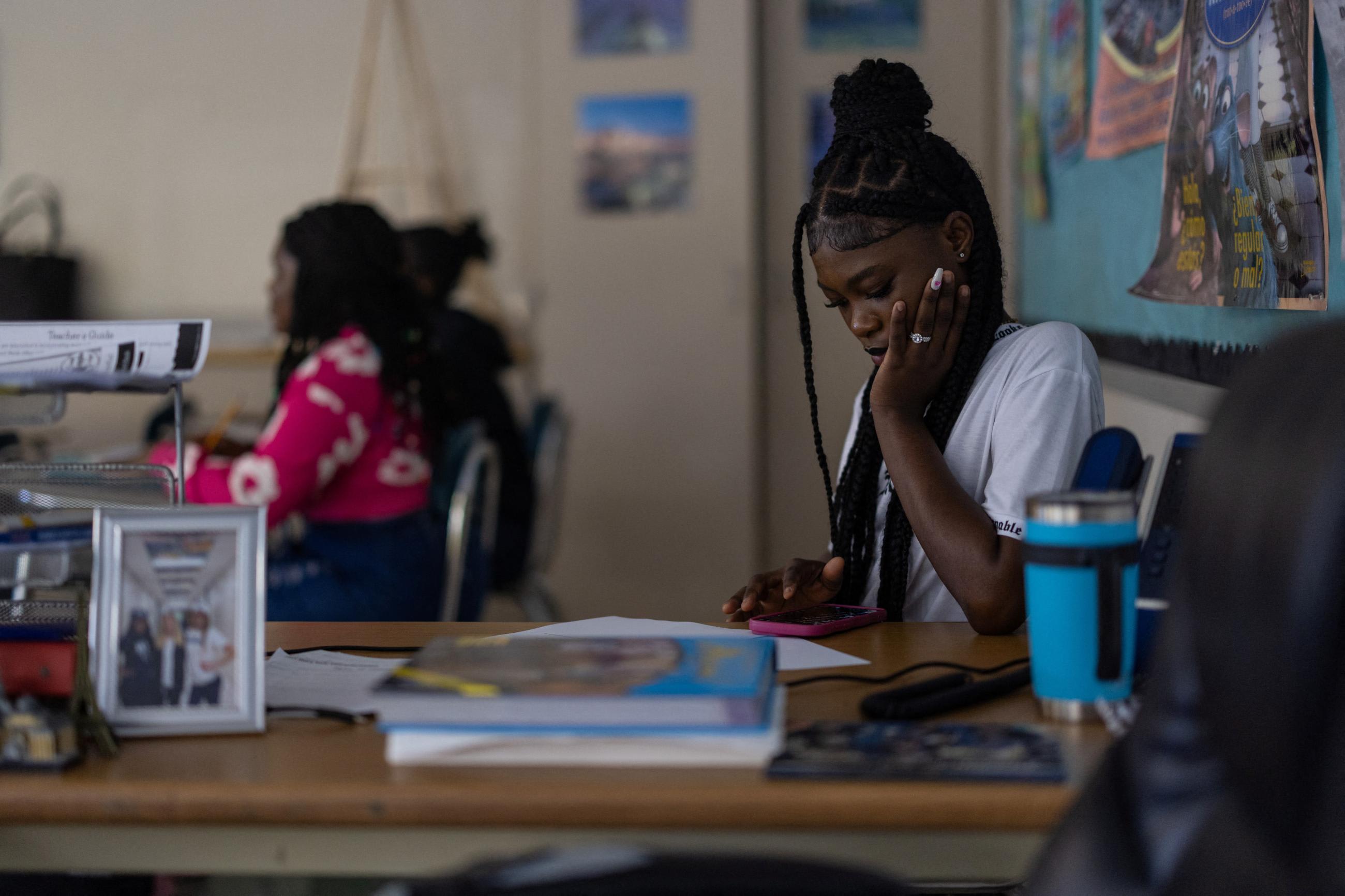

Nowhere are the hidden costs of targeted advertising on minors more apparent than on social media. These platforms collect mounds of data that enable companies to piece together users' identities, insecurities, and purchasing behaviors. Teens regularly use social media for entertainment, for social connection, and even as a source for current events. For instance, three-quarters of teens report using YouTube daily, and social media writ large is a regular fixture of young life in the twenty-first century. Around the world, as the reach of social media expands, so must the guardrails that protect children.

This past year, Louisiana joined just a handful of states in passing historic protections for children's data—and high school students led the way with the help of State Representative Kim Carver.

House Bill 577, which prohibits social media companies from collecting data to use for targeted advertising to minors, passed unanimously in the state legislature. As a member of the Louisiana Youth Advisory Council, I met regularly with state legislators, provided personal testimony, and sat in on high-stakes amendment negotiations. For months, this group of young people in Louisiana worked tirelessly to ensure that this important guardrail would pass.

Big tech companies caught wind of this legislation as it made its way through the legislature. As the Wall Street Journal reported, Apple lobbied heavily against stricter age verification rules for social media platforms. Legislators ultimately nixed this provision.

As social media evolves alongside the rest of the internet, policymakers should keep the safety of children at the top of their minds. Legislation is one way to create a safety net that prevents the exploitation of youth online and tackles the ballooning mental health crisis.

A Targeted Youth

The United States was the first country to implement warning labels for cigarettes, which are an effective way to prevent young people from picking up the habit of smoking. In the 1970s, Claude E. Teague, the assistant director of research at R. J. Reynolds Tobacco, worked on "new brands tailored to the youth market" and wrote a memo explaining the need to target high schools. The market for social media also relies heavily on the engagement of young users. Surgeon General Vivek Murthy's proposal for a warning label on social media is a great first step to protect children. More extensive guardrails should also be implemented.

Targeted advertisements are how platforms such as YouTube, Instagram, and TikTok generate much of their revenue. In many cases, proprietary algorithms recommend products based on user data gathered through user activity on the app or usage of other platforms. Without guardrails, this practice leaves children vulnerable to profit-driven forces. Simply put, youth mental health is not priced into the balance sheet.

What makes targeted advertising so successful relative to normal advertising is personalization. A user's online data is the key for a company to build a lifelong relationship with the consumer. Now more than ever, minors are exposed to this world of consumerism and interpersonal comparison.

Under the Children's Online Privacy Protection Act (COPPA), companies in the United States can collect only the minimum possible data from children under the age of 13. This cutoff should be raised to cover adolescents, because current legislation does not adequately protect their mental health.

Some companies state that they can self-regulate, pointing to examples such as Instagram's recent implementation of new age verification for teen users. But this movement toward self-regulation has come only after backlash from parents, youth advocates, and lawmakers. For instance, Instagram now mutes notifications to teens between 10 p.m. and 7 a.m. Yet kids can still readily circumvent many of these parental controls. A 2022 survey found that a third of those aged 8 to 17 in the United Kingdom had an internet profile claiming to be at least 18 years old. Targeted advertising is just one piece of the social media puzzle, and stronger data privacy protections offer a compelling solution.

The California Consumer Privacy Act allows users to know how their data is used and to opt out of data sharing. Movements at the state level have now sparked a conversation at the national level. Federally, bills such as COPPA 2.0 and the Kids Online Safety Act (KOSA) aim to protect young consumers online. COPPA 2.0 expands the protections of the original act, namely its limits on data collection, to all minors. KOSA seeks to prevent social media companies from promoting harmful content to young users and to expand parental controls.

After passing with a monumental Senate vote, 91 to 3, in July 2024, KOSA has stalled in the House of Representatives. Some lawmakers argue that this legislation is a major violation of free speech and that this sort of guardrail could do more harm than good.

The Mental Health Stakes for Minors

Now more than ever, mental illness is weighing on the American people. In the United States, a 2023 survey of children aged 12 to 17 by the Substance Abuse and Mental Health Services Administration showed that around a fourth of these children had experienced a major depressive episode within the past year.

Mental health issues have long been on the rise, but the impact on young people is the most pronounced. The Surgeon General's advisory noted that, in 2019, "one in three high school students and half of female students reported persistent feelings of sadness or hopelessness, an overall increase of 40% from 2009." Many factors contribute to this unfortunate rise, and social media usage is one way to account for poor mental health outcomes in children. One cohort study published in JAMA found that "adolescents who spend more than 3 hours per day on social media may be at heightened risk for mental health problems."

Another study, published in 2018 in The Lancet, found that "greater social media use [is] related to online harassment, poor sleep, low self-esteem, and poor body image." Social media can exacerbate real-world youth issues such as eating pathology, depression, social anxiety, bullying, and poor self-esteem.

The long-term effects are troubling. Targeted advertisements are used to keep users online for longer and to get them to spend more. The content that social media exposes young people to can amplify "preexisting vulnerabilities" in the form of anxiety, depression, and eating disorders.

As the internet evolves and becomes increasingly complex, the world must adapt by developing new solutions for an ever-changing online climate. Currently, children face a difficult trade-off between the growing need for an online presence and their own safety.

Without constructive discussion and action, the target on the younger generation will remain, leaving them vulnerable to exploitation. They will bear the consequences of inaction.